As some of you may know, I’ll be attending the ITExpo in Miami Beach, Florida. The subject I’ll be lecturing about is “Virtualizing Asterisk”. However, I have to be honest, I really need to change the subject to be called “Asterisk in the Cloud“.

Ever since the introduction of Amazon EC2, people had been trying to get Asterisk to run properly inside an EC2 instance. While installing a vanilla Asterisk on any of the Fedora/RedHat variant instances in EC2 isn’t much of a hassle, getting the funky stuff to work is a little more tricky.

One of these tricky bits (which I hadn’t yet found a solution for) is the issue of supplying a timer for Asterisk’s MeetMe application. In the old days (prior to Asterisk 1.6), Asterisk required the utilization of a virtual timer driver, provided by Zaptel in the past and now the DAHDI framework. The problem is, that while you are fully capable of compiling and installing DAHDI on an Amazon EC2 instance – the problem starts once you want to use it.

A few words about Amazon EC2

For those not familiar with Amazon EC2, its general infrastructure is based upon the XEN virtualization project. XEN is a para-virtualization framework, meaning that is performs some of the work utilizing the underlying Operating System kernel and some of the work performed with a special Kernel in the virtualized Operating System instance. This poses an interesting issue with every type of application that relies on hardware resources and their emulation.

To learn more about the XEN project, go to http://www.xen.org.

So, where’s the big deal?

So, if you can compile your code and run it in an instance, as long as you have the kernel headers and kernel source packages – you should be just fine – right? WRONG!

Amazon EC2 deploys its own Kernel binary image upon bootstrap, causing what ever compilation you may have done to the Kernel to go away (unless you’re creating a machine from real scratch). Another issue is a version skew between the installed Operating System kernel modules, the actual kernel and the installed compiler. For example, the instance that I was using had the XEN capable kernel compiled with gcc version 4.0.X, while the installed operating system was gcc version 4.1.X – so, no matter what I did to compile my kernel modules or binary kernel, I would always end up in a situation where loading the newly compiled kernel modules will generate an error.

Did I manage to solve it? – NOT YET. I’m still working on it, and I have to admit, that considering the fact that I have over 10 years of Linux experience and had compiled kernels from scratch many times, this one has gotten me a little baffled – I guess I’ll just need a few more nights and a case of Red-Bull to crack this one open.

So, what can we do with EC2?

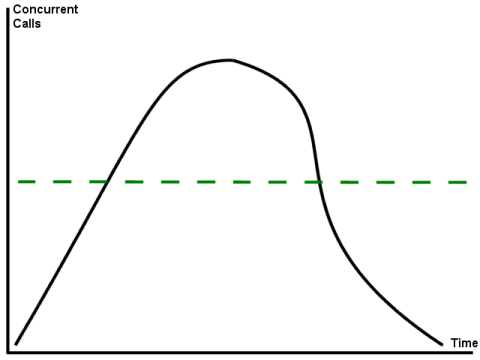

In my view, EC2 + Asterisk is the ultimate IN/NGN services environment – and I have proof of that. A recent lab test that I did with one of my customers showed a viable commercial alternative to Sigvalue when using Asterisk and EC2 structures. The main reason for our belief in using EC2 was the following graph:

What we’ve noticed was that while our IN/NGN system was generating traffic, it’s general usage showed peak usage for a period of 2.5 hours, with a gradial increase and decrease over a period of almost 10 hours. Immediately that led us to a question: “Can we use Amazon EC2 to provide an automatd scaling facility for the IN/NGN system, allowing the system to reduce its size as required?”

To do this, we’ve devised the following IN/NGN system:

Our softswitch would have a static definition of routing calls to all our Asterisk servers, including our EC2 instances which had static Elastic IP numbers assigned to these. The EC2 Controller server was incharge of initiating the EC2 instances at the pre-defined times, mainly, 30 minutes prior to the projected increase in traffic. Once the controller reaches its due timer, it will automatically launch the EC2 instances required to sustain the inbound traffic.

For our tests, we’ve initiated 5 AMI instances, using the EC2 c1.medium instance. This instance basically includes 2 cores of an AMD opteron, about 8GB of RAM and about 160GB of Hard drive – more than enough. Initially, we’ve started spreading the load evenly across the servers, reaching about 80 concurrent channels per instance, and all was working just fine. We managed to reach a point where we were able to sustain a total of about 110 concurrent channels per instance, including the media handling – which is not too bad, considering that we are running inside a XEN instance. The one thing that made the entire environment extremely light weight is the GTx Suite of APIs for Asterisk. Thanks to the GTx Suite of APIs, scalability is fairly simple, as all application-layer logic is controlled from a central business logic engine, serving the Asterisk servers via an XML-RPC based web service. Thanks to Amazon, practically infinite, bandwidth allocation – the connections from the Asterisk servers to the US based central business logic was set at a whopping 25mSec, thus, there was no visible delay to the end user.

It is clear that the utilization of Asterisk and EC2 operational constructs can allow a carrier to establish their own IN/NGN environment. However, how these are designed, implemented and operated are at the hands of the carrier – and not a specific vendor. If the carriers around the world will take to this approach, time will tell. As a recent survey stated that 18% of the US PBX market is currently dominated by Open Source solution, having Digium dominate 85% of these 18% (~15%), I’m confident that we will see this combination of solutions in the near future.